Two (worthwhile) ways of thinking

There are many ways to test our decision-making ability. What I propose is a simple riddle, but one that must be answered instinctively.

“On a lawn there is a patch of grass; every day the patch doubles in size, and it takes 48 days to cover the entire lawn. How many days does it take to cover half the lawn?”

Who says 24?

…

Who says 96?

…

Who says 47?

Most people are certain that they make decisions rationally: that they weigh the alternatives optimally and evaluate the pros and cons of each option, so as to reach, within a reasonable time, the choice that best serves the objective they have set.

If the option chosen was 47, this is probably the case.

If it was 24 or 96, it is proof that instinct, at least this time, got the better of reason. What is too often underestimated is that intuition leads us astray: systematically, recurrently, and predictably, as the research on biases and heuristics teaches.
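For readers who want to check the arithmetic, here is a minimal sketch in Python. It assumes the patch exactly doubles every day and fills the lawn on day 48, as the riddle states; the names `coverage` and `first_day_covering` are my own, chosen only for illustration. Since the patch doubles daily, it must have covered half the lawn exactly one day before covering all of it.

```python
from fractions import Fraction

LAWN_DAYS = 48  # from the riddle: the patch covers the whole lawn on day 48


def coverage(day: int, full_day: int = LAWN_DAYS) -> Fraction:
    """Fraction of the lawn covered on `day`, assuming the patch doubles daily."""
    if day >= full_day:
        return Fraction(1)  # the lawn cannot be more than fully covered
    # Each step back in time halves the covered area.
    return Fraction(1, 2 ** (full_day - day))


def first_day_covering(target: Fraction, full_day: int = LAWN_DAYS) -> int:
    """First day on which coverage reaches at least `target` of the lawn."""
    day = 0
    while coverage(day, full_day) < target:
        day += 1
    return day


print(first_day_covering(Fraction(1, 2)))  # 47
print(coverage(24))                        # 1/16777216
```

On day 24, the answer that "halving the days" suggests, the patch covers less than one ten-millionth of the lawn. The intuitive answer treats the growth as linear, while the riddle describes exponential growth.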

Why is it so easy to make mistakes?

The answer comes from two personalities who were both strong and, at the same time, antithetical. They were both grandchildren of Eastern European rabbis and shared a deep interest in the way “people function in their normal states, practicing psychology as an exact science, and both searching for simple, powerful truths […], gifted with minds of shocking productivity. Both Jewish atheists in Israel.”

Their names were Amos Tversky and Daniel Kahneman, the latter a winner of the Nobel Prize in economics.

Amos Tversky was brilliant and an optimist, because “When you’re a pessimist and the bad thing happens, you experience it twice: once when you worry and the second time when it happens.” He could light up scientific conversations with experts in fields far removed from his own, yet he was almost ethereal, and intolerant of social conventions and of metaphors: “They replace genuine uncertainty about the world with semantic ambiguity. A metaphor is a cover-up.”

Kahneman, by contrast, was born in Tel Aviv and spent his childhood in Paris. In 1940 the German occupation put the family at risk. Hidden in the south of France, they managed to survive (with the exception of his father, who died of untreated diabetes). After the war, the rest of the family emigrated to Palestine.

If Tversky was a night owl, Kahneman is an early riser who often wakes up alarmed about something. He is prone to pessimism, claiming that by “expecting the worst, one is never disappointed.” This pessimism extends to the expectations he has for his own research, which he likes to question: “I have a sense of discovery whenever I find a flaw in my thinking.”

Tversky liked to say, “People aren’t that complicated. Relationships between people are complicated.” But then he would stop and add, “Except for Danny.”

They were different, but anyone who saw them together, spending endless hours talking, knew that something special was happening. They are credited with explaining why we make mistakes when we make decisions.

Kahneman’s is an immense work, dedicated to his late colleague: in the end, it’s all about being slow or fast.

It’s all about being slow or fast

When it comes to thinking or making a decision, the brain mobilizes two systems: System 1 (S1) and System 2 (S2). S1 is intuitive, impulsive, automatic, unconscious, fast and economical, and it loves to jump to conclusions. S2, on the other hand, is conscious, deliberative, reflective and slow; it is often lazy and laborious to get started.

S1 and S2 don’t really exist; they are a handy analogy (a label) that helps us understand what’s going on in our heads. For instance, it is thanks to S1 that we can complete the saying “red sky at night…” quickly and without thinking, tie our shoes without really paying attention to the action itself, notice that one object is farther away or closer than another, instantly detect fear on a person’s face, or answer in a few moments the question “What is the capital of France?”

It is thanks to S2 that we can focus on the voice of a specific person in a noisy, crowded room, find our car in a packed parking lot, dictate our phone number, fill out questionnaires, do calculations and learn poems by heart. It would not be possible to perform complex tasks like these simultaneously: we can do several things at once, but only if they are simple and require little mental effort.

Bias, heuristics and intuitions

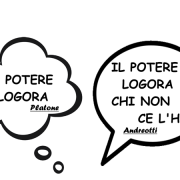

Both systems are active whenever we are awake, but while the first works automatically, the second settles into a low-effort mode in which only a small fraction of its capacity is used. Its rule is to consume as few calories as possible.

Normally S2 follows S1’s advice without making any changes. However, if System 1 runs into difficulty, it calls on System 2 to help analyze the information and suggest a solution to the problem. In the same way, S2 steps in when it realizes that its partner is about to blunder: for example, when you would like to insult your boss, but something stops you. That something is System 2.

However, S2 is not always involved in the judgments of System 1, and this leads to error. How? Just as happened with the riddle at the opening of this article.

While it is indisputable that System 1 is at the origin of most of our errors (that is, biases and heuristics), it is also true that it produces many “expert intuitions”, the automatic reflexes that are essential in our lives for making important decisions in a fraction of a second. It is thanks to System 1 that a surgeon in the operating room or a firefighter facing a blaze can make life-and-death choices in an emergency and very often make the right decision in those few moments.

The trouble is that S1 doesn’t know its own limits. It tends to make unforgivable mistakes in assessing the statistical probability of an event.

Using System 1, we generally underestimate the risk that rare catastrophic events will occur, while overestimating the probability that they will recur soon after such a disaster has happened. In short, while System 1 helps us to make countless decisions, its speed generates errors, precisely because it does not analyze the available data: that takes time, and it prefers to jump to conclusions so as to show us quickly and effortlessly which way to go.

System 1 is therefore easily influenced. This is why nudges were born: to prevent errors, but above all to protect people from being crushed by the volatility of their System 1 or the slowness of their System 2. They answer the need for a gentle push, the idea at the heart of Nudge, the book Kahneman cites as the bible of behavioral economics, “that directs people to make the right choice”.

Sources

Lewis M., The Undoing Project (Italian edition: Un’amicizia da Nobel. Kahneman e Tversky, l’incontro che ha cambiato il nostro modo di pensare), Raffaello Cortina Editore, Milan, 2017, pp. 165-166.

Stanovich K., West R., Individual differences in reasoning: Implications for the rationality debate?, Behavioral and Brain Sciences (2000) 23, 645-726. http://pages.ucsd.edu/~mckenzie/StanovichBBS.pdf

Kahneman D., Thinking, Fast and Slow (Italian edition: Pensieri lenti e veloci), Mondadori, Milan, 2016, p. 23.